Published on HPCwire, written by Oliver Peckham

Weather and climate applications are among the most important in high-performance computing, often serving as raisons d’être and flagship workloads for the world’s most powerful supercomputers. But next-generation weather and climate modeling remains extraordinarily difficult, owing both to the enormity of the task and to the hard-to-evolve code that has been developed over many years to tackle it.

Boulder-based startup TempoQuest is working to shoulder some of that burden, porting major parts of one of the most prominent weather codes – the Weather Research and Forecasting (WRF) Model – to GPUs. HPCwire recently spoke to Christian Tanasescu, COO and CTO for TempoQuest, about their new weather model.

WRF’s need for speed

Today’s WRF model, Tanasescu said, is the product of several decades of development led by the National Center for Atmospheric Research (NCAR) and the National Oceanic and Atmospheric Administration (NOAA).

“It grew over time, achieving today a level of maturity that makes it one of the most-used codes, in particular for regional models,” he said, adding that larger nations tend to have their own global models. “But when it comes to commercial usage – like renewable energy, agriculture, aviation, logistics, unmanned vehicles … What they need is very, very high-resolution, precise and hyper-local forecasts. You don’t get it from the government, from the National Labs.”

WRF fills many of those needs, with tens of thousands of users across more than 160 countries. But there’s a problem: as an immeasurably complex compendium of Fortran-based code, WRF has not kept up with the latest and greatest in hardware acceleration and is predominantly run on CPU-based systems.

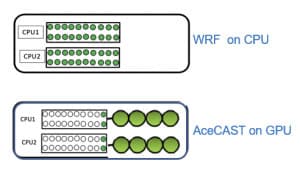

Tanasescu said that there have been many efforts to accelerate WRF models at organizations like NCAR and NOAA, but added that these efforts focused on accelerating only parts of the weather models. “Because you shuffle the data back and forth between CPUs and GPUs and essentially, you execute part of the job on CPU, it means it runs in hybrid mode,” he said. “And hybrid mode is not the most effective.”

Enter TempoQuest. Tanasescu said that he and his colleagues noted the movement towards accelerators some years ago and saw an opportunity in the weather and climate model space. Now, TempoQuest is entering a market dominated by government organizations and open-source applications as an independent software vendor (ISV) that is “striving to take the models developed in National Labs to the most powerful supercomputers these days.”

GPU WRF, alias AceCAST

After a multi-year development effort, the TempoQuest team produced what they call “GPU WRF” when talking to the scientific community or “AceCAST” when talking to commercial customers. TempoQuest refactored WRF’s dynamical core, along with other key elements of the model, to build a new, accelerated application that runs “entirely on GPU – exclusively on GPU,” Tanasescu said. “It’s probably the only code in weather and climate that has achieved this level of porting to GPUs.”

During development, the TempoQuest team made use of an NCAR study that identified the most-used elements of WRF’s structure, which broadly consists of a set of physics schemes, each with its own set of options.

“Based on that study, we prioritized the physics to be ported on GPU,” Tanasescu said. “We ported the top three options for each major scheme – or four, in some cases; with this set of supported physics scheme options, we can run over 85% of most weather forecasts used today.”

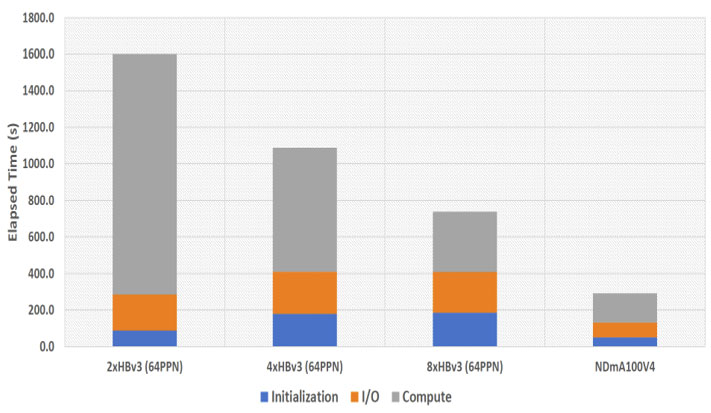

While this does mean that AceCAST is a subset of WRF – not a full port – Tanasescu says that for those 85% of forecasts, the model more than holds its own. “Ballpark,” he said, AceCAST is around 10-16× faster than WRF on a node-to-node basis, holding resource cost constant against a four-GPU system. The 10× end of that range applies to comparisons with AMD’s Epyc “Milan” CPUs; the 16× end, to comparisons with Intel CPUs.

If you hold performance constant, Tanasescu said, the cost to run CPU WRF is about three times higher. Alternatively, holding runtime and cost constant, users could increase resolution with AceCAST: “advanced” WRF models typically run at 3km resolution, Tanasescu said, while AceCAST can use the same resources to run at 1km or less. “We’ve seen a much better correlation with the ground truth” by doing this, Tanasescu added.

Most of the physics options in AceCAST were ported to OpenACC, while six “computationally expensive” options are in CUDA. Tanasescu said that NCAR updates WRF about twice a year, making it easy for TempoQuest to keep up. Right now, he said, AceCAST supports “essentially any [x86] Linux cluster that has Nvidia GPUs”; the company is “very close to release on AMD GPUs as well” – including the MI250X GPUs that power the exascale Frontier system, among other major supercomputers. AceCAST uses just one CPU core per GPU in the system, and only for job initialization and I/O tasks.

Tanasescu says that TempoQuest also plans to support Intel’s Max series GPUs (née Ponte Vecchio) for use on Argonne National Laboratory’s forthcoming exascale Aurora supercomputer. He said that Argonne researchers have been using ensemble runs of GPU WRF in their climate studies and cited a previous collaboration with Argonne and Nvidia in which a version of the model was able to scale across 512 nodes (about 3,000 GPUs) on Oak Ridge National Laboratory’s Summit supercomputer. (That kind of scale is an outlier for TempoQuest: Tanasescu said that most AceCAST jobs use somewhere between four and 12 GPUs.)

TempoQuest’s strategy

Market adoption has been a challenge for TempoQuest. Tanasescu said that as a small startup, “lack of visibility” was a problem for them (“Very few people know that such a capability exists!”). He added that stakeholders like the National Labs and NOAA are used to open-source applications, making AceCAST’s proprietary nature a barrier to adoption, and that smaller customers’ limited access to GPUs has been another barrier. On the other side of that coin, he said, the pressure on system operators to find applications for GPU-heavy flagship supercomputers has been a boon.

For commercial customers, Tanasescu said that AceCAST is offered directly as a software subscription and through a cloud-based software-as-a-service (SaaS) model that runs on AWS and Rescale. TempoQuest also created an automated modeling workflow (AMF) that serves as a “kind of wrapper around AceCAST,” handling functions that are necessary or desirable for a forecasting workflow but are not included in WRF (e.g., preprocessing, grid generation and visualization). Tanasescu said that TempoQuest’s renewable energy clients use AceCAST to forecast wind profiles and solar irradiance for energy production estimates. TempoQuest is also working with satellite companies to incorporate proprietary satellite data into weather models to support use cases like hyper-local forecasts for spaceport launches.

Tanasescu did add that TempoQuest is considering whether or not to loosen its closed-source status, citing ongoing discussions with government labs. But, for now, TempoQuest remains – in Tanasescu’s words – “the only true HPC ISV in weather and climate.”